Why Real User Feedback Matters Less In An AI World

Users are too shortsighted to match the cycle time of second order effects

This newsletter is 100% written by me. No ghostwriters or GPTs.

Happy Sunday and welcome to the Investing in AI newsletter. I’m Rob May, CEO at Nova. I’m also a very active early-stage investor, so if you have an interesting AI deal, please send it my way.

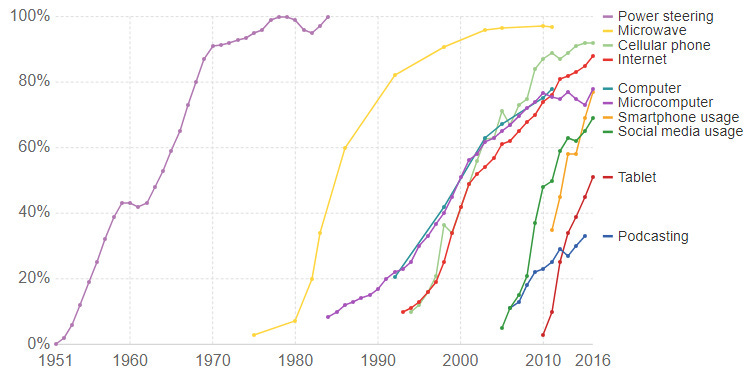

Today I want to talk about this article on the second order effects of AI, and what it means for user feedback in product development cycles. You’ve probably all seen the graph below about the increasing speed of product adoption.

I want to consider what happens to this in a world of AI tools. I’m going to argue that faster adoption, faster development, and the rise of synthetic users all together change the way user feedback will happen for AI companies.

WHAT FASTER ADOPTION CYCLES MEAN FOR AI

Change is coming faster and faster, and as we see from the graph above, the speed at which new technologies are adopted is increasing. Every new breakthrough penetrates the market faster than the one before it.

New technologies sometimes take a while to adjust to. But AI is about to bring an avalanche of adjustment opportunities as intelligence permeates every corporate and personal workflow. And many of these changes will create secondary and tertiary effects that will come from the market adaptations to the initial change. As an example, take email marketing. Initially email made it cheaper and easier to keep in touch with friends and family around the world. But it also became easier and cheaper for companies to send messages to people. That led to spam, which led to spam filters, which led to people gaming spam filters, which led to a new equilibrium where eventually we cared less about email and moved important conversations to other channels like chat or text.

These launch and adaptation cycles were slow enough historically that you could build a business in the time window of one of them. But now those time windows are shrinking. ChatGPT launched about 5 months before this article was published, and already there are waves of impacts: students using it to write homework, tools built to check homework to see if it was ChatGPT generated, and surprisingly deep adoption of this new equilibrium by students, teachers, and schools.

It doesn’t take much math skill to see what happens if we shrink adoption cycles from 4 months to 3 months, to 2 months, to 1 month, to 3 weeks, to 2 weeks… you get the picture. How do you spend time dealing with user feedback on a tool that will be obsolete in months? What about one that could be obsolete in weeks? If you had, say, a user interface that was changing at that rate, user feedback on any one state would be minimally useful. You can’t spend 4 months testing a user interface that won’t even be live for that long before it sees significant changes again.
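To make that arithmetic concrete, here is a minimal Python sketch. The function name `usable_fraction`, the fixed 4-week feedback study, and the list of lifespans are all illustrative assumptions, not data. It computes how much of a product state's life is left once a feedback study on it has finished.

```python
def usable_fraction(feedback_weeks: float, lifespan_weeks: float) -> float:
    """Fraction of a product state's lifespan that remains after feedback is collected.

    Both arguments are hypothetical inputs chosen for illustration.
    """
    if lifespan_weeks <= 0:
        raise ValueError("lifespan_weeks must be positive")
    # Feedback that arrives after the state is gone leaves nothing to act on.
    remaining = max(lifespan_weeks - feedback_weeks, 0.0)
    return remaining / lifespan_weeks


# Assumed: the feedback study always takes 4 weeks, while the lifespan
# of any one product state keeps shrinking.
FEEDBACK_WEEKS = 4
for lifespan in (16, 12, 8, 4, 3, 2):
    share = usable_fraction(FEEDBACK_WEEKS, lifespan)
    print(f"lifespan {lifespan:>2} weeks: {share:.0%} of it left to act on feedback")
```

Under these assumed numbers, the share of time left to act on feedback falls from three quarters to nothing once the lifespan shrinks to the length of the study itself.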

WHAT FASTER DEVELOPMENT MEANS FOR AI

Let’s roll back to 2005 and the publication of “The Four Steps to the Epiphany.” That book launched the concepts of customer development into the startup mainstream as more and more entrepreneurs adopted those processes over the ensuing decade. But why was customer development such an effective approach?

It worked so well because building things was expensive. Following a customer development process meant exposing parts of the process to the user earlier to get feedback on whether the thing you were building was useful or not.

But what happens when AI speeds up development of everything and lowers the cost of development to a fraction of what it was previously? Sometimes I see startups that tell me things like “we interviewed 200 customers before we ever wrote a line of code.” I don’t generally like these startups because I believe you need a bias for action to be successful in this field (and this shows the opposite). That aside, if development is fast and cheap, it’s probably better just to build the thing and get real user feedback from the real application instead of doing a bunch of interviews before writing any code.

Customer development was trying to save startups from wasting money. But in a world where AI makes product development cheap and easy, it could be that customer development is the waste.

THE RISE OF SYNTHETIC USERS

One of the things we are learning about LLMs is that they can do a good job of capturing the average view of the world. As a result, companies like Synthetic Users are showing how to use LLMs to generate user feedback. It sounds strange, but the deeper you dig into it, the more it makes sense.

In recent weeks I’ve seen companies building synthetic users of all types, for many applications. Like other types of synthetic data use cases, I think it will end up with an 80/20 split. Roughly 80% of your feedback will come from synthetic users and 20% will come from real users.

So what does this all mean when you put it together? If cycles of innovation and adoption are shortening, time to build software is shortening, and feedback can be generated largely by machines and data, how do we handle user feedback in an AI world where these things are true?

I believe the role of user feedback, the way we approach it, and its usefulness are all about to change significantly. Generating new ideas will be more valuable than validating the near term status quo. Breadth of experimentation will beat pre-launch customer surveys. And user feedback will be focused more on rapidly validating experiments than on trying to synthesize it into a plan for what to start building.

People who work at startups love to quote dumb aphorisms like they somehow prove a point, but it’s best to ignore them and remember that success is very situational. Every product and company is a little different, and while some frameworks for decision making are common across them, there’s a lot of nuance. Now that the whole thing is moving faster, your user feedback process needs to adjust to accommodate it. The companies that move their business processes to machine speed are the ones that will win.
